Abstract

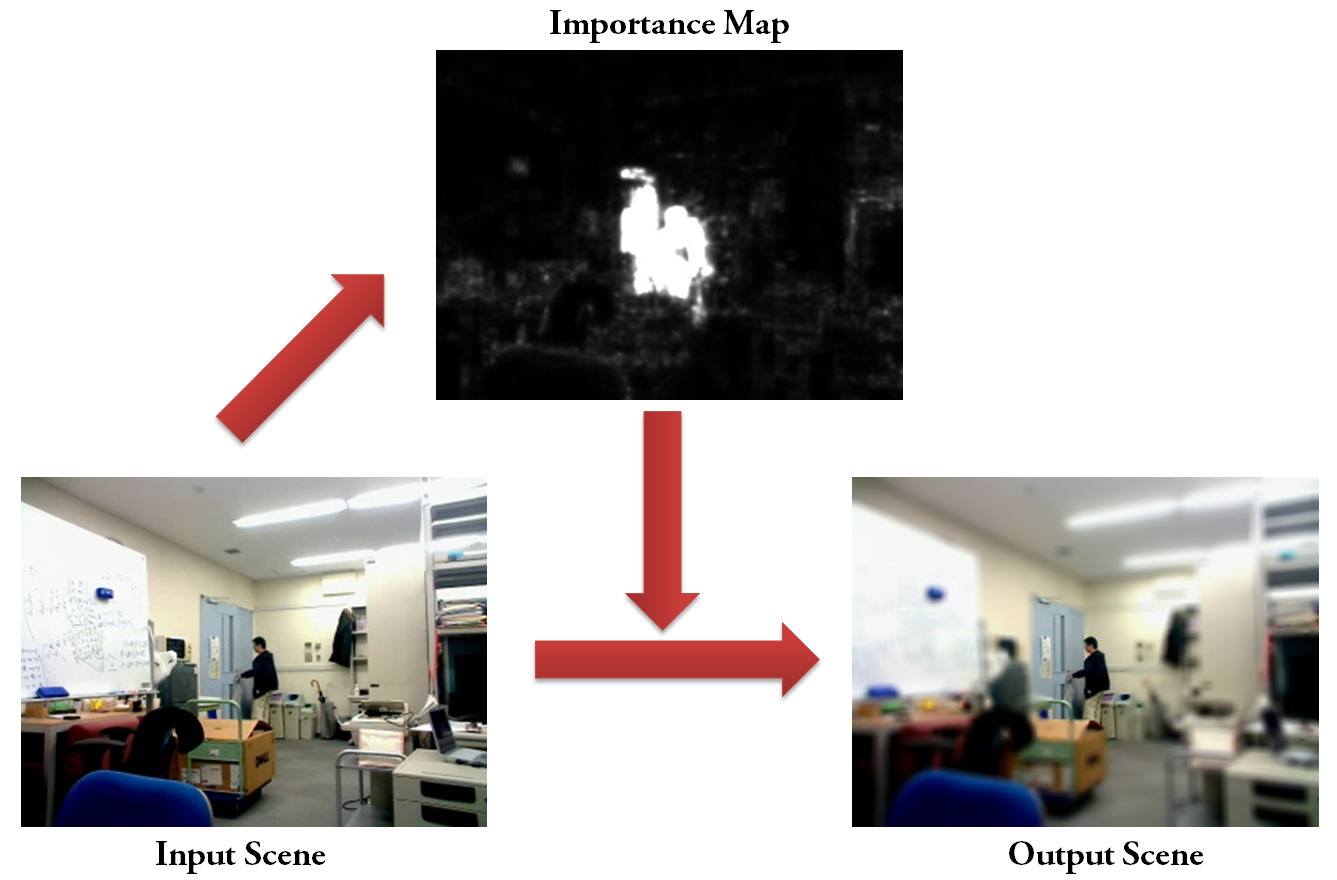

In this paper, we propose a visual guidance method for images and videos, which can automatically directs viewers' attention to important regions in low-level vision. Inspired by the modern model of visual attention, the importance map of an input scene is automatically calculated by the combination of low-level features such as intensity and color, which are extracted using spatial filters in different spatial frequencies, together with a set of temporal features extracted using a temporal filter in case of dynamic scenes. A variable-kernel-convolution based on the importance map is then performed on the input scene, to make a semantic depth of field effect in a way that important regions remain sharp while others are blurred. The pipeline of our method is efficient enough to be executed in real time on modern low-end machines, and the associated eye-tracking experiment demonstrates that the proposed system can be complementary to the human visual system.

Paper & Video

|