Hi, welcome!

This lab focuses on signal processing and interactive media, exploring how they advance practical engineering.

The student work shown on our homepage is representative but does not cover the full range of our students' skills. Please see their regularly updated personal webpages for a more comprehensive view of their learning and expertise.

Thanks! You can also find us on X for more content: https://x.com/lcglab

Research

Optimization in Applications

🍩

- Optimization

- Signal Processing

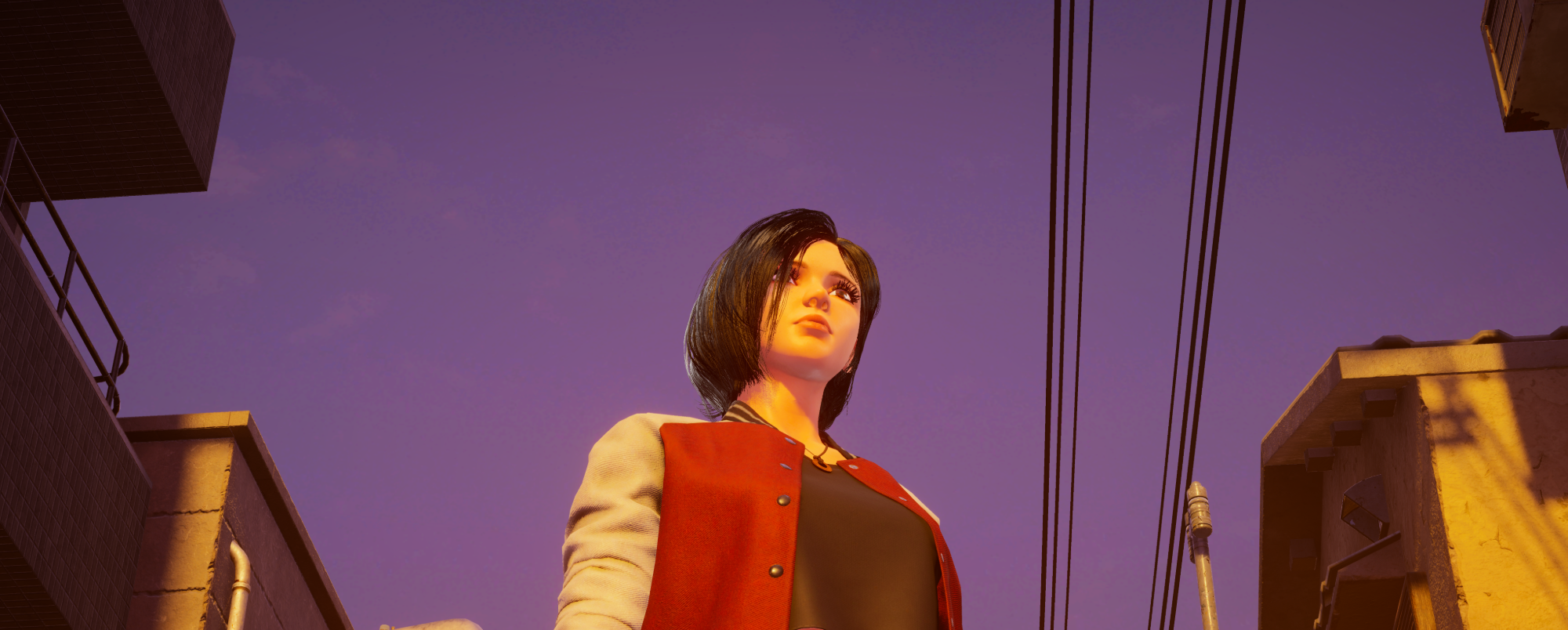

Modern Signal Processing and Computer Graphics (CG) Rendering, with Applications to Interactive Facial Expressions

现代信号处理、计算机图形学(CG)渲染及其在交互式面部表情中的应用

現代の信号処理、コンピュータグラフィックス(CG)とインタラクティブ仮想表情表現への応用

🤨

Mr Takahashi, Mr Wang, Mr Fukuyama, et al.

- Emotion

- Computer Graphics (CG)

- Signal Processing

- Internet of Things

- Human-Computer Interface

- Natural Language Processing

- Game Design & Animation Filmmaking

Modern interactive facial-expression technology draws on signal processing, natural language processing, facial action coding, and graphics rendering to let virtual characters convey emotions accurately, redefining realism and immersion.

现代交互式面部表情技术通过信号处理、自然语言处理、面部动作编码和图形学渲染,让虚拟角色准确传递情感表达,重塑真实感与沉浸体验。

現代のインタラクティブ表情技術は、信号処理、自然言語処理、顔面動作符号化、グラフィックスレンダリングを活用し、仮想キャラクターが感情を正確に伝えることを可能にし、没入感とリアリズムを再構築します。
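As one illustration of where signal processing fits in this pipeline, per-frame facial tracking output tends to be noisy and can be low-pass filtered before it drives a character rig. The Python sketch below assumes a hypothetical tracker that emits Facial Action Coding System (FACS) action-unit intensities in [0, 1] for each frame. The exponential-smoothing filter, the `AU_TO_BLENDSHAPE` table, and the blendshape names are illustrative assumptions, not the lab's actual pipeline.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical retargeting table: FACS action unit -> (blendshape name, gain).
# In FACS, AU06 is the cheek raiser and AU12 the lip corner puller; the
# blendshape names are placeholders for whatever the target rig uses.
AU_TO_BLENDSHAPE: Dict[str, Tuple[str, float]] = {
    "AU06": ("cheekSquint", 0.8),
    "AU12": ("mouthSmile", 1.0),
}


def smooth_action_units(
    frames: Iterable[Dict[str, float]], alpha: float = 0.3
) -> List[Dict[str, float]]:
    """Exponential moving average (one-pole low-pass filter) per action unit.

    Smaller alpha gives smoother but laggier output. An AU that is missing
    from a frame keeps its last smoothed value.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    state: Dict[str, float] = {}
    smoothed: List[Dict[str, float]] = []
    for frame in frames:
        for au, raw in frame.items():
            x = min(max(raw, 0.0), 1.0)  # clamp out-of-range tracker values
            prev = state.get(au, x)      # first sample initializes the filter
            state[au] = prev + alpha * (x - prev)
        smoothed.append(dict(state))
    return smoothed


def to_blendshapes(
    aus: Dict[str, float],
    mapping: Dict[str, Tuple[str, float]] = AU_TO_BLENDSHAPE,
) -> Dict[str, float]:
    """Map smoothed AU intensities to blendshape weights, capped at 1.0."""
    weights: Dict[str, float] = {}
    for au, (shape, gain) in mapping.items():
        if au in aus:
            weights[shape] = min(1.0, weights.get(shape, 0.0) + gain * aus[au])
    return weights


if __name__ == "__main__":
    noisy = [{"AU12": 0.0}, {"AU12": 1.0}, {"AU12": 0.9}, {"AU12": 1.2}]
    for frame in smooth_action_units(noisy, alpha=0.5):
        print(to_blendshapes(frame))
```

The single `alpha` parameter trades responsiveness against jitter; adaptive filters that change their cutoff with signal speed are a common refinement when fast expressions must not lag.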

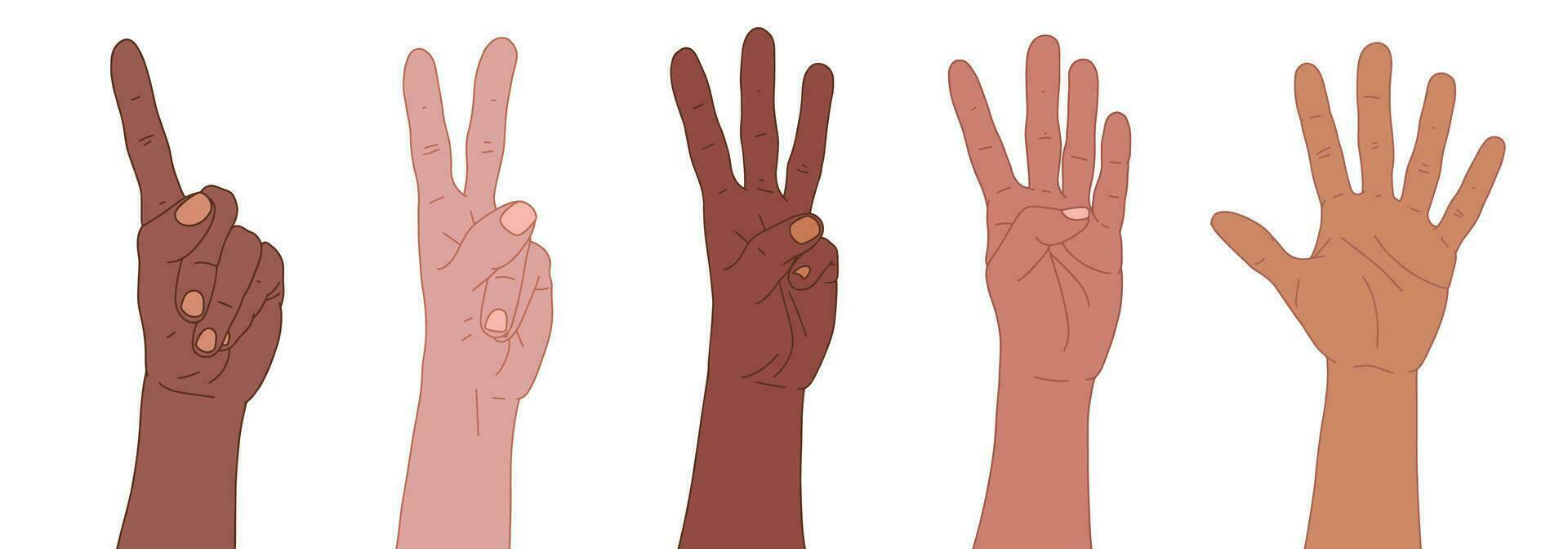

Augmenting and Generating Human Motion and Body Language via Inverse Kinematics (IK) and Rendering

基于逆运动学(IK)与渲染技术的人体运动与身体语言的增强及生成

逆運動学(IK)とレンダリング技術による人体動作とボディランゲージの拡張と生成

👉

Mr Wang, Mr Faraz, Mr Fukuyama, et al.

- Inverse Kinematics (IK)

- Accessibility

- Emotion & Motion

- Internet of Things

- Computer Vision

- Rendering

We explore how signal processing, inverse kinematics (IK), and rendering can be used to augment and generate body language, producing more natural and realistic forms of human expression.

我们探索如何利用信号处理、逆向运动学(IK)与渲染技术来增强和生成肢体语言,从而为人类创造出更自然、更逼真的表达方式。

信号処理、逆運動学(IK)、レンダリング技術を活用して、ボディランゲージを強化および生成し、より自然でリアリスティックな人間の言語表現を創出する方法を探求します。
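To make the IK idea concrete, here is a minimal analytic solver for a planar two-bone chain (for example, upper arm and forearm reaching a wrist target), plus a small augmentation routine that jitters wrist targets and re-solves IK to produce pose variants. This is a textbook illustration under simplifying assumptions (2D, no joint limits); the function names and the jitter-based augmentation are hypothetical and are not the method of the lab's published work.

```python
import math
import random
from typing import List, Sequence, Tuple


def two_bone_ik(
    x: float, y: float, l1: float, l2: float, bend_sign: float = 1.0
) -> Tuple[float, float]:
    """Analytic IK for a planar two-link chain rooted at the origin.

    Returns (theta1, theta2) in radians: theta1 is the root angle from the +x
    axis, theta2 the elbow angle relative to the first link. Targets outside
    the reachable annulus are pulled onto it along the same direction.
    bend_sign (+1 or -1) selects one of the two mirror-image solutions.
    """
    d = math.hypot(x, y)
    d = min(max(d, abs(l1 - l2)), l1 + l2)  # clamp to reachable distances
    # Law of cosines gives the elbow angle; clamp guards against rounding.
    cos_t2 = (d * d - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    theta2 = bend_sign * math.acos(min(max(cos_t2, -1.0), 1.0))
    # Aim the chain at the target, minus the angle the elbow bend introduces.
    theta1 = math.atan2(y, x) - math.atan2(
        l2 * math.sin(theta2), l1 + l2 * math.cos(theta2)
    )
    return theta1, theta2


def forward_kinematics(
    theta1: float, theta2: float, l1: float, l2: float
) -> Tuple[float, float]:
    """End-effector (wrist) position for the given joint angles."""
    return (
        l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2),
        l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2),
    )


def augment_trajectory(
    targets: Sequence[Tuple[float, float]],
    l1: float,
    l2: float,
    n_variants: int = 3,
    noise: float = 0.05,
    seed: int = 0,
) -> List[List[Tuple[float, float]]]:
    """Hypothetical augmentation: jitter wrist targets, re-solve IK per frame.

    Because every pose comes from the IK solver, each variant respects the
    bone lengths (joint limits are not modeled here).
    """
    rng = random.Random(seed)
    variants = []
    for _ in range(n_variants):
        variants.append([
            two_bone_ik(x + rng.gauss(0.0, noise), y + rng.gauss(0.0, noise), l1, l2)
            for x, y in targets
        ])
    return variants


if __name__ == "__main__":
    t1, t2 = two_bone_ik(1.2, 0.6, l1=1.0, l2=1.0)
    print(forward_kinematics(t1, t2, 1.0, 1.0))  # approximately (1.2, 0.6)
```

Perturbing targets and re-solving, rather than adding noise to joint angles directly, keeps each generated pose consistent with the skeleton; a 3D rig would need a full solver (for example, an iterative method with joint limits) instead of this closed form.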

Our published paper:

Wang, Binghao, Jing Lei, and Xiang Li. "Inverse Kinematics-Augmented Sign Language: A Simulation-Based Framework for Scalable Deep Gesture Recognition." Algorithms 18.8 (2025): 463.

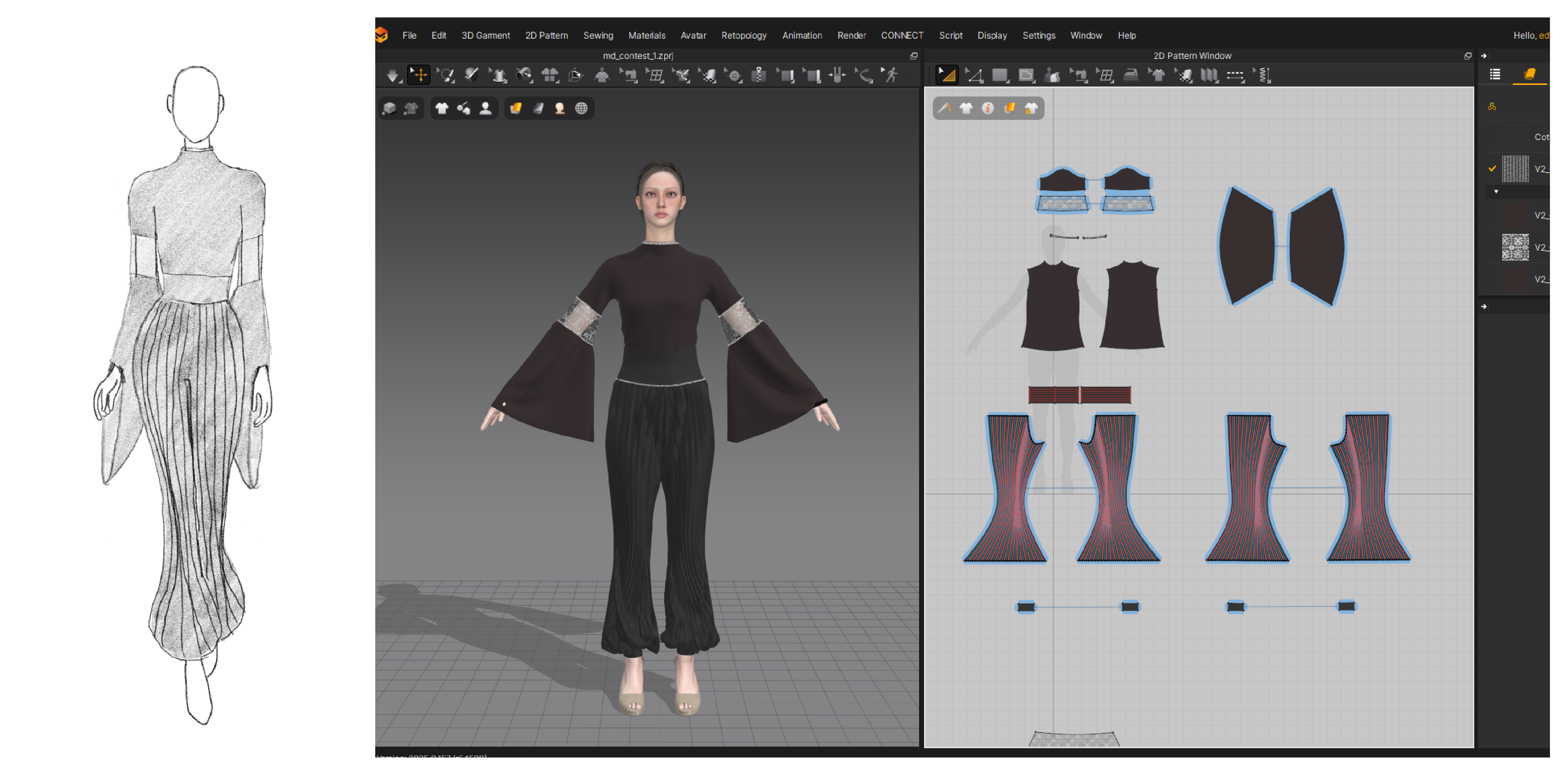

Animation Filmmaking with Emotionally Expressive Characters and Costume Design

动画电影制作中情感表现力角色与服装设计

アニメ映画における感情表現豊かなキャラクターと衣装デザイン

🎬

Miss Hayashi & Miss Hamada

- Animation Filmmaking

- Emotion

- Computer Graphics (CG)

- Character Design

Emotionally expressive animated characters, with costumes created using advanced techniques and code, bring stories to life and deepen both the audience's connection and the narrative's impact.

通过先进技术与编程实现情感表达、服饰精美的动画角色,为故事注入生命力,能显著增强观众的情感共鸣与叙事感染力。

先進の技術とコーディングによって創り出された、感情表現豊かで衣装デザインも精巧なアニメーションキャラクターは、物語に命を吹き込み、観客の感情的な繋がりと物語の影響力を深めます。

Interactive Study-With-Me Game Featuring Emotional Soundscapes

沉浸式Study-With-Me游戏:情感化声景体验

感情的な音響演出を取り入れたインタラクティブなStudy-With-Meゲーム

🎧🎮

Miss Akutsu

- Emotion

- Music

- SFX

- Game Design

"Study-With-Me" immersive games integrate emotional soundscapes to boost focus, reduce stress, and make learning more engaging and enjoyable.

“Study-With-Me”类沉浸体验游戏融合情感化声景,以提升专注力、减轻压力,使学习过程更具吸引力和乐趣。

「Study-With-Me」没入型ゲームは、感情に働きかけるサウンドスケープ(音景)を統合し、集中力の向上、ストレスの軽減を図り、学習をより魅力的で楽しいものにします。
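One common way to make a soundscape respond to a player's state is to crossfade between ambient layers. The sketch below assumes two hypothetical layers, "calm" and "focus", and a hypothetical `mood` parameter in [0, 1] set by the game. It uses an equal-power (sine/cosine) crossfade, a standard audio-mixing technique, and is not necessarily what the game itself does.

```python
import math
from typing import List, Sequence, Tuple


def layer_gains(mood: float) -> Tuple[float, float]:
    """Equal-power gains for the (calm, focus) layers.

    mood = 0 plays only the calm layer, mood = 1 only the focus layer.
    Since cos^2 + sin^2 = 1, the summed power of two uncorrelated layers
    stays constant across the fade, avoiding a loudness dip in the middle.
    """
    m = min(max(mood, 0.0), 1.0)  # clamp the (hypothetical) game parameter
    angle = m * math.pi / 2.0
    return math.cos(angle), math.sin(angle)


def mix(calm: Sequence[float], focus: Sequence[float], mood: float) -> List[float]:
    """Mix two equal-length mono sample buffers with equal-power gains."""
    if len(calm) != len(focus):
        raise ValueError("layers must have the same length")
    g_calm, g_focus = layer_gains(mood)
    return [g_calm * a + g_focus * b for a, b in zip(calm, focus)]


if __name__ == "__main__":
    print(layer_gains(0.5))  # both gains close to 0.707
```

A linear crossfade (gains `1 - mood` and `mood`) would drop the combined power to half at the midpoint, which listeners tend to hear as a dip; in a real game, `mood` would also be ramped gradually to avoid audible clicks.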

Project Page

Contact

lixiang.dev[at]gmail.com