The Hearing Robot

The ultimate goal of this project will be the development of a multimedia

interactive autonomous mobile robot.

In the first phase of development,

the robot will be able to localize and track sound sources in any complex environment,

separate target sounds from environmental noise,

and understand its environment through sounds,

e.g., human voices, footsteps, sirens and crashes.

The robot will be able to avoid obstacles and move to a destination autonomously.

Visual and auditory interfaces will be provided.

- Multi-purpose

The purpose of this project is to develop a multipurpose robot

which can be used in several ways.

For example, the robot can serve as a guard robot, and it can also be used

as an agent robot that attends a meeting in place of its user,

so that the user can remotely receive the visual scenes of the meeting room

as well as the auditory scenes.

- Multi-mode

Considering a guard robot patrolling around our campus,

we would need the robot to be able to work in two different modes.

One is the autonomous mode, in which the robot automatically moves

around the campus and checks for possible suspicious objects.

The other one is the remote control mode.

When the robot has found a suspicious object,

it is controlled by its owner, who orders it

to approach the object and examine it in more detail.

However, recent research on mobile robots has concentrated almost entirely

on the autonomous aspect. In this project, we will provide the robot

with an interactive mode as well as the autonomous mode.

- Multi-sensor

The multimodal robot must be capable of handling multimedia resources,

especially sound media to complement vision.

The visual sensor is one of the most popular sensors used today for

mobile robots.

Since a robot generally looks at the external world through a camera,

difficulties occur when an object is not in the visual field

of the camera or when the lighting conditions are poor.

With vision alone, a robot cannot detect a nonvisual event, although in many cases

such an event is accompanied by a sound emission.

In these situations, the most useful information is given by audition.

Audition is one of the most important senses

used by humans and animals to recognize their environments.

Although the spatial resolution of audition is relatively low

compared with that of vision,

the auditory system can complement and cooperate with vision systems.

For example, sound localization can enable the robot to direct its camera

to a sound source.
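
As a minimal sketch of that last point, the snippet below converts an estimated source direction into pan and tilt angles for a camera mount. The function name `pan_tilt_degrees` and the frame convention (x forward, y left, z up, all in the camera's own frame) are assumptions made for illustration; the project description does not specify either.

```python
import math


def pan_tilt_degrees(direction):
    """Convert a direction vector (x forward, y left, z up; an assumed
    convention) into (pan, tilt) angles in degrees.

    pan  > 0 turns the camera to the left, tilt > 0 raises it.
    """
    x, y, z = direction
    if x == 0 and y == 0 and z == 0:
        raise ValueError("direction vector must be non-zero")
    pan = math.degrees(math.atan2(y, x))
    tilt = math.degrees(math.atan2(z, math.hypot(x, y)))
    return pan, tilt


if __name__ == "__main__":
    # A source ahead and to the left, at camera height.
    print(pan_tilt_degrees((1.0, 1.0, 0.0)))
```

The vector does not need to be normalized, since only the ratios of its components enter the two angles.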

- Base Platform: The robot is based on the LABO-3 platform of

Applied AI Systems, Inc.

The LABO-3 platform is a wheel-based mobile robot.

It is equipped with infrared and tactile sensors for obstacle avoidance,

and a micro-processor for steering control and sensor signal processing.

It is driven by two-wheel differential steering.

- Auditory Sensors: Four microphones are arranged on the surface of a sphere.

Three microphones (x, y, z) lie in the same horizontal plane at the vertices of

a regular triangle, and the fourth microphone (o) is placed over the center of the triangle

at a height such that the four microphones (o-xyz) form a Cartesian coordinate frame

(i.e. ox, oy and oz are mutually at right angles). The diameter of the sphere is about 30 cm.

- Vision Sensor: A fixed directional CCD camera is mounted

on the front of the robot. The camera is planned to be upgraded

to an active camera system with a pan/tilt servo platform.

- Communication: A bi-directional radio modem is used

for host-robot communication. Four FM transmitters and one video transmitter

are used for audio and video signal transmission respectively.

- Audio4-USB: A DSP-based data acquisition system

with a Motorola DSP56307

(24-bit fixed-point) processor.

It has four channels of audio input and output

and connects to the host PC via a USB interface.

- TriMedia DSP Board: A PCI plug-in DSP board

with a Philips TriMedia TM1300 DSP processor for general digital video,

audio, graphics and telecommunications processing.

It supports video and audio input/output.
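
The orthogonal o-xyz microphone arrangement described above lends itself to a simple direction estimate, sketched here under a far-field (plane-wave) assumption. If microphone o is taken as the origin and microphones x, y and z each sit at the same distance d along the three perpendicular axes, then the delay between o and each other microphone is proportional to the corresponding component of the unit vector pointing at the source. This is a textbook simplification and not necessarily the model-based method used on the robot; the function names, the speed of sound and the arm length d are assumptions for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature


def direction_from_delays(tau_x, tau_y, tau_z, arm_length):
    """Estimate the unit vector pointing from microphone o to the source.

    tau_i is (arrival time at o) - (arrival time at microphone i), in
    seconds, so it is positive when microphone i hears the sound first.
    For a plane wave, tau_i = arm_length * u_i / SPEED_OF_SOUND.
    """
    scale = SPEED_OF_SOUND / arm_length
    ux, uy, uz = scale * tau_x, scale * tau_y, scale * tau_z
    norm = math.sqrt(ux * ux + uy * uy + uz * uz)
    if norm == 0.0:
        raise ValueError("all delays are zero; direction is undefined")
    # Normalizing absorbs small errors in the assumed speed or arm length.
    return (ux / norm, uy / norm, uz / norm)


def simulate_delays(unit_direction, arm_length):
    """Ideal noise-free delays for a far-field source (used for checking)."""
    return tuple(arm_length * u / SPEED_OF_SOUND for u in unit_direction)


if __name__ == "__main__":
    arm = 0.17  # metres; a hypothetical value, not a measured one
    delays = simulate_delays((0.6, 0.0, 0.8), arm)
    print(direction_from_delays(*delays, arm))
```

The result is expressed in the array's own ox-oy-oz frame, which is tilted relative to the horizontal plane of the x, y, z triangle, so a fixed rotation would be needed before using it in the robot's frame.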

Useful Auditory Functions

- Sound Localization

- Auditory Scene Analysis:

When talking to other people in a noisy room

or walking in the street, the auditory system faces the problem of

separating complex sound signals into different sound streams.

Although the acoustic events produced

by different sources may overlap in the time and frequency domains

and may share major characteristics,

the human auditory system can identify and separate the sources without effort.

What methods does the human auditory system use?

As a mathematical model, the task of separating multiple sources is

described as solving a multiple-input, multiple-output system

knowing only the outputs, without knowing the inputs or the system itself.

There are now some algorithms that solve this problem based on

the assumption that the inputs of different channels are mutually independent.

However, this approach requires much computation, especially

when the positions of the sound sources and receivers vary with time.

One big difference from the human auditory system is that this approach cannot separate inputs coming from the same position.

Clearly, such algorithms do not satisfy the requirements of an auditory system.

In this project, we will give a computational model

for the primary sound organization stage of the auditory system.

Algorithms will be constructed based on the spatial cues

and known psychoacoustic cues, e.g. harmonicity, temporal continuity,

synchronized AM and FM, and other sound features.

- Sound Understanding:

Sound understanding is important for an autonomous robot to recognize its environment.

This is especially true in our research because we use the auditory system

as the major part of the sensing system.

Here, we will not focus on speech recognition, which refers to

understanding the meaning of spoken language. As

a first step toward sound understanding, we will restrict the targets

to some specific sounds, e.g. human voices, phone rings, door

knocks, doors opening, footsteps, sirens, crashes, and so on. Sound

understanding will enable the robot to act in response to what is

happening. For sound understanding, any existing machine learning

technology can be used. Examples include neural networks, decision

trees and decision rules. In general, neural networks are good at

learning in changing environments, and decision trees (or rules) are

good at selecting useful features for recognition. We are going to

combine symbolic and non-symbolic approaches to make use of the

advantages of both.
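
To make the harmonicity cue mentioned under Auditory Scene Analysis more concrete, the sketch below groups spectral peaks into streams according to whether they lie near an integer multiple of a candidate fundamental frequency. It is only an illustration of the idea, not the project's algorithm: the function names, the 2% tolerance and the greedy assignment order are all assumptions, and the candidate fundamentals are taken as given rather than estimated.

```python
def group_harmonics(peaks_hz, f0_hz, tolerance=0.02):
    """Return the peaks lying within a relative tolerance of n * f0_hz
    for some integer n >= 1."""
    grouped = []
    for f in peaks_hz:
        n = round(f / f0_hz)  # nearest harmonic number
        if n >= 1 and abs(f - n * f0_hz) <= tolerance * n * f0_hz:
            grouped.append(f)
    return grouped


def split_by_fundamentals(peaks_hz, candidate_f0s, tolerance=0.02):
    """Greedily assign peaks to candidate fundamentals, in the order given.

    A peak that fits several fundamentals goes to the first one tried.
    Returns (streams, remaining), where streams maps f0 -> list of peaks
    and remaining holds the peaks that fit no candidate.
    """
    remaining = list(peaks_hz)
    streams = {}
    for f0 in candidate_f0s:
        members = group_harmonics(remaining, f0, tolerance)
        streams[f0] = members
        remaining = [f for f in remaining if f not in members]
    return streams, remaining


if __name__ == "__main__":
    peaks = [100.0, 201.0, 299.0, 150.0, 451.0, 730.0]
    streams, leftover = split_by_fundamentals(peaks, [100.0, 150.0])
    print(streams)
    print(leftover)
```

In a fuller system this cue would be combined with the spatial, continuity and modulation cues listed above, since harmonics shared by two sources cannot be assigned on harmonicity alone.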

- All-directional: Compared to vision, audition is all-directional.

When a sound source emits energy, the sound fills the air,

and a sound receiver (microphone) receives the sound energy from all directions.

Some specialized cameras can also receive an image from all directions,

but still have to scan the total area to locate a specific object.

Audition mixes the signals into a one-dimensional time series,

making it easier to detect an urgent or emergency situation.

- No Need for Illumination:

Audition requires no illumination,

which enables a robot to work in darkness or low light.

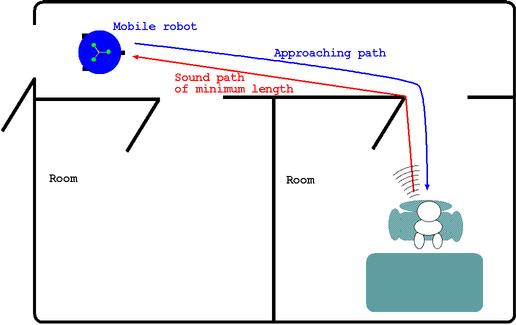

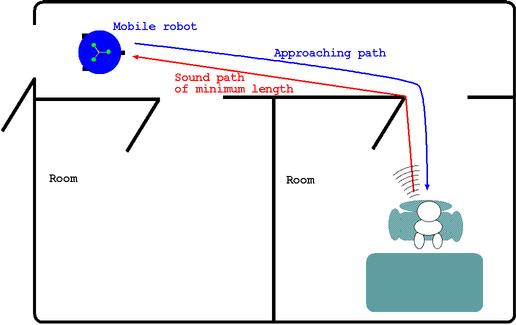

- Less Affected by Obstacles:

Audition is also less affected by obstacles,

so a robot can perceive auditory information from sources behind obstacles.

One example is to localize a sound source outside of a room or around a corner.

The robot will first localize the sound source in the area of the door/corner,

and then travel to that point and listen again, finally locating the sound source.
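
Because a microphone delivers a one-dimensional time series, as noted in the list above, a sudden loud event can be flagged with very little processing. The sketch below marks frames whose short-time energy is far above the typical level. The function names, the frame length and the factor of 10 over the median are illustrative assumptions, not parameters from the project.

```python
import statistics


def frame_energies(samples, frame_len):
    """Mean squared amplitude of consecutive non-overlapping frames.
    Trailing samples that do not fill a whole frame are ignored."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(sum(s * s for s in frame) / frame_len)
    return energies


def detect_loud_frames(samples, frame_len, ratio=10.0):
    """Return indices of frames whose energy exceeds `ratio` times the
    median frame energy (the median serves as a rough background level)."""
    energies = frame_energies(samples, frame_len)
    if not energies:
        return []
    background = statistics.median(energies)
    return [i for i, e in enumerate(energies) if e > ratio * background]


if __name__ == "__main__":
    quiet = [0.01, -0.01] * 50   # 100 low-amplitude samples
    burst = [0.5, -0.5] * 50     # 100 high-amplitude samples
    signal = quiet + quiet + burst + quiet
    print(detect_loud_frames(signal, frame_len=100))
```

A detector of this kind only says when something happened; deciding what it was and where it came from is the job of the sound understanding and localization functions.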

References

- Huang, J. and Supaongprapa, T. and Terakura, I. and Wang, F. and

Ohnishi, N. and Sugie, N.,

"A Model Based Sound Localization System and Its Application to

Robot Navigation",

Robotics and Autonomous Systems (Elsevier Science),

Vol.27, No.4, pp.199-209, 1999.

- Huang, J. and Ohnishi, N. and Sugie, N.,

"Building Ears for Robots: Sound Localization and Separation",

Artificial Life and Robotics (Springer-Verlag),

Vol.1, No.4, pp.157-163, 1997.

last modified: 16 August 2000 by Jie Huang

(j-huang@u-aizu.ac.jp)