Introduction

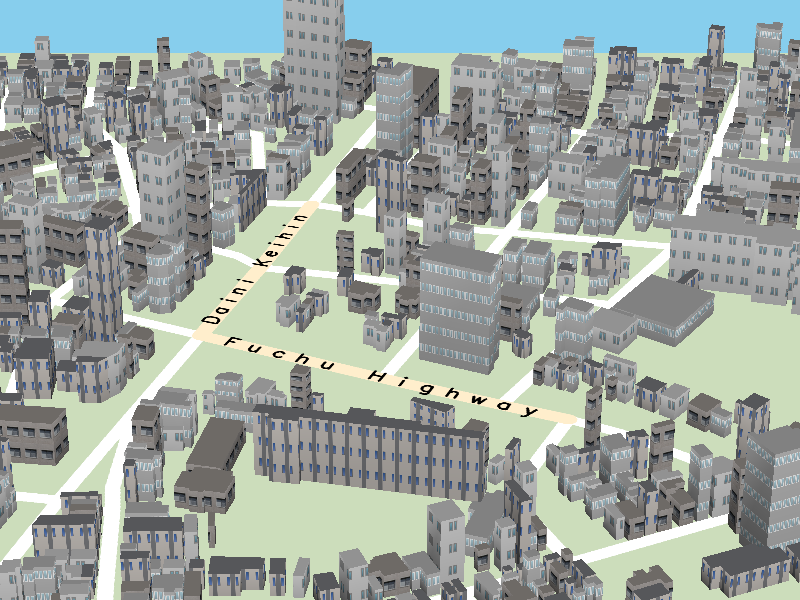

In composing hand-drawn 3D urban maps, the most common design problem is to avoid overlaps between geographic features such as roads and buildings by displacing them consistently over the map domain. Nonetheless, automating this map design process is still a challenging task because we have to maximally retain the 3D depth perception inherent in pairs of parallel lines embedded in the original layout of such geographic features. This paper presents a novel approach to disoccluding important geographic features when creating 3D urban maps for enhancing their visual readability. This is accomplished by formulating the design criteria as a constrained optimization problem based on the linear programming approach. Our mathematical formulation allows us to systematically eliminate occlusions of landmark roads and buildings, and further controls the degree of local 3D map deformation by devising an objective function to be minimized. Various design examples together with a user study are presented to demonstrate the robustness and feasibility of the proposed approach.

Constraints

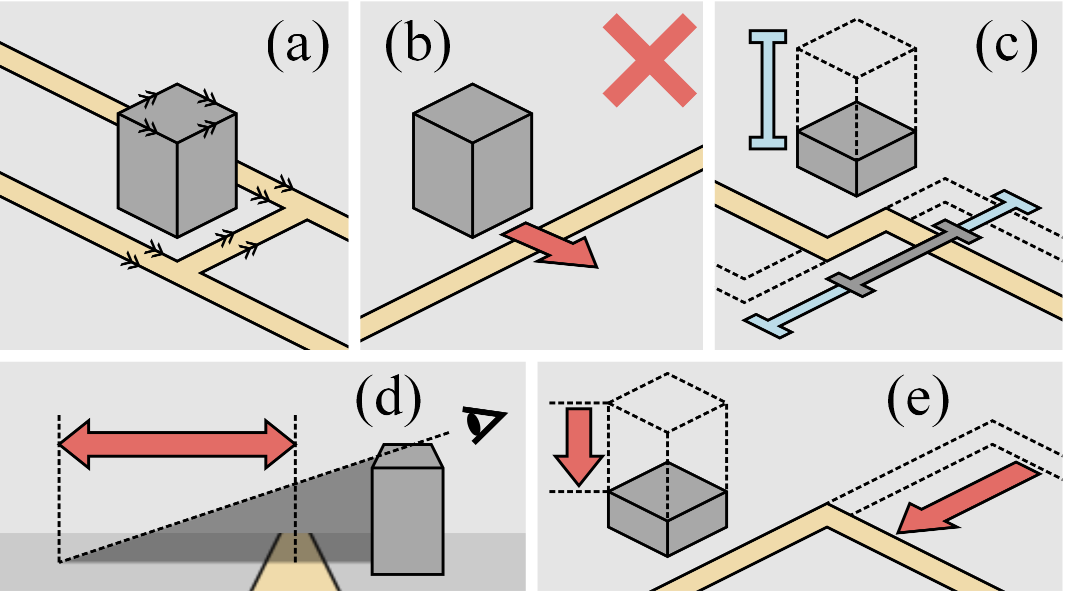

We employ five design criteria to improve map readability: Fixed orientation (Figure 1(a)), Relative position (Figure 1(b)), Scale limits (Figure 1(c)), Minimum displacement (Figure 1(d)), and Occlusion avoidance (Figure 1(e)).

Note that the first three criteria keep the arrangement of geographic features consistent and are formulated as hard constraints, while the last two are introduced as soft constraints to enhance the realism and readability of 3D urban maps, respectively.
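To illustrate how hard and soft constraints can coexist in one linear program, here is a minimal sketch on a one-dimensional toy problem. It is not the formulation used in the paper: the scenario (an occluder and a landmark on a single screen axis), every name and number, and the brute-force vertex enumeration used as a stand-in for a real LP solver are all assumptions made for illustration. Hard constraints appear as plain inequalities, while the soft occlusion constraint gets a penalized slack variable, and the displacement cost plays the role of a minimum-displacement term.

```python
from fractions import Fraction
from itertools import combinations


def solve_square(rows, rhs):
    """Solve a square linear system exactly; return None if it is singular."""
    n = len(rows)
    m = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(rows, rhs)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return None
        m[col], m[pivot] = m[pivot], m[col]
        scale = m[col][col]
        m[col] = [v / scale for v in m[col]]
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return [row[n] for row in m]


def minimize_lp(cost, A, b):
    """Minimize cost . x subject to A x >= b by checking every vertex.

    Only suitable for tiny, bounded problems such as this illustration.
    """
    n = len(cost)
    best = None
    for active in combinations(range(len(A)), n):
        x = solve_square([A[i] for i in active], [b[i] for i in active])
        if x is None:
            continue
        feasible = all(
            sum(a * v for a, v in zip(row, x)) >= bound for row, bound in zip(A, b)
        )
        if feasible:
            value = sum(c * v for c, v in zip(cost, x))
            if best is None or value < best[0]:
                best = (value, x)
    return best


# Hypothetical toy data: positions along one screen axis.
occluder_x, landmark_x = 0, 2   # current positions
required_gap = 5                # separation needed to reveal the landmark
max_shift = 1                   # hard limit on each displacement
slack_weight = 10               # penalty for any remaining occlusion

# Unknowns: x = (shift_left, shift_right, slack), all non-negative.
cost = [1, 1, slack_weight]     # total displacement + weighted slack
A = [
    [1, 1, 1],                  # soft: gap after shifting + slack >= required_gap
    [-1, 0, 0],                 # hard: shift_left <= max_shift
    [0, -1, 0],                 # hard: shift_right <= max_shift
    [1, 0, 0], [0, 1, 0], [0, 0, 1],  # non-negativity of all unknowns
]
b = [required_gap - (landmark_x - occluder_x), -max_shift, -max_shift, 0, 0, 0]

value, (shift_left, shift_right, slack) = minimize_lp(cost, A, b)
print(value, shift_left, shift_right, slack)
```

In this toy instance both shifts reach their hard limit of 1, and one unit of slack remains: the soft constraint is violated as little as the hard constraints allow. For problems of realistic size, a dedicated LP solver would replace the vertex enumeration.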

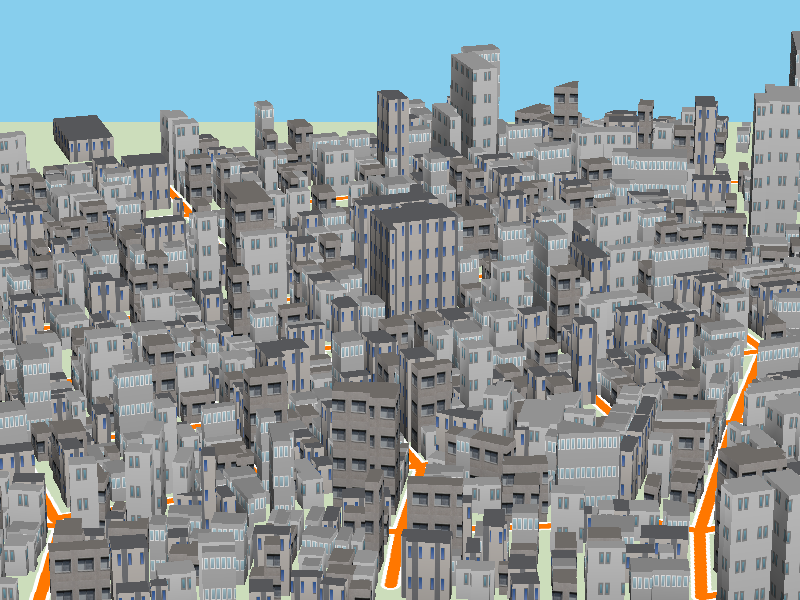

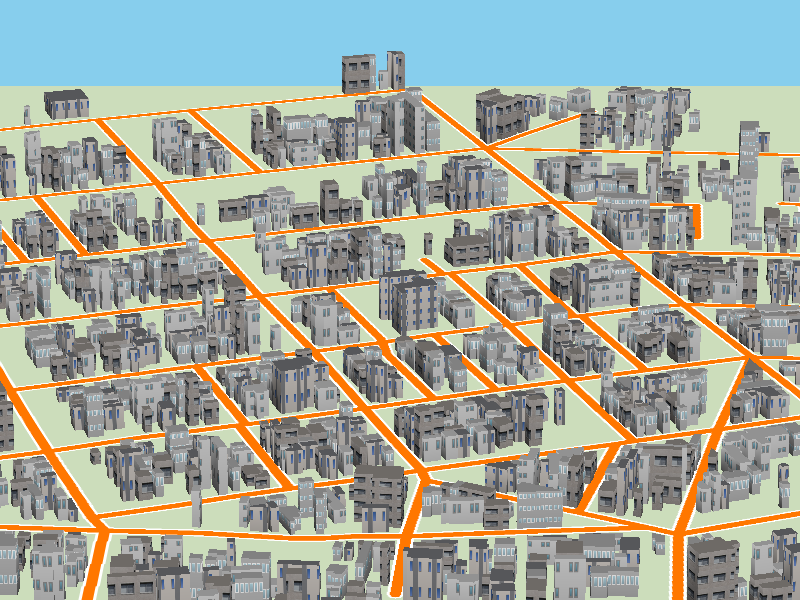

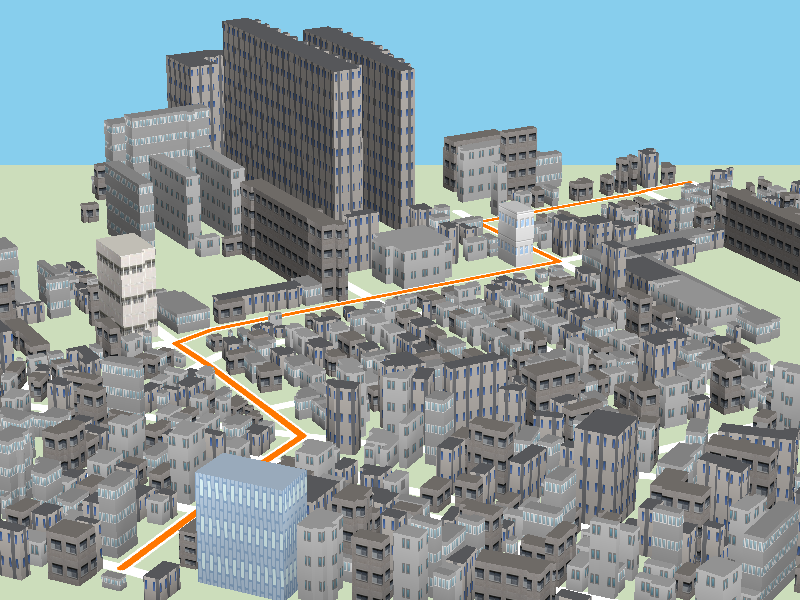

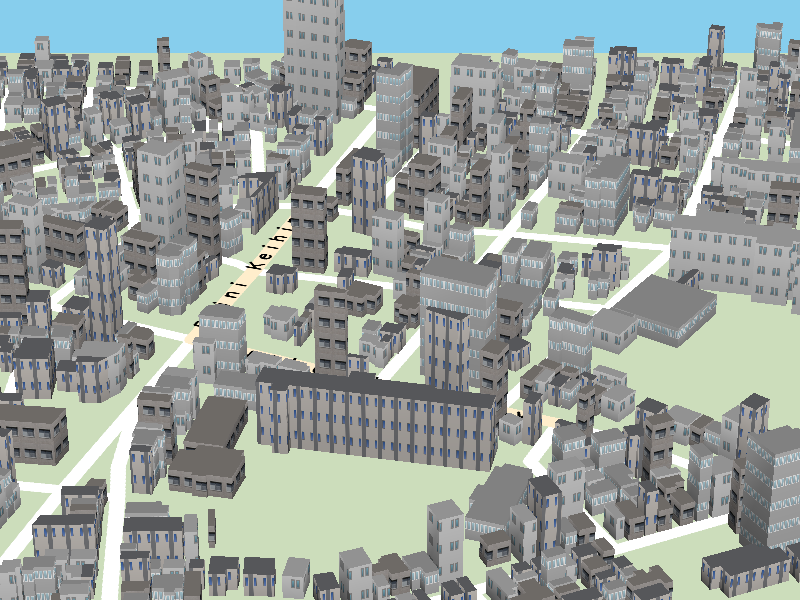

Result

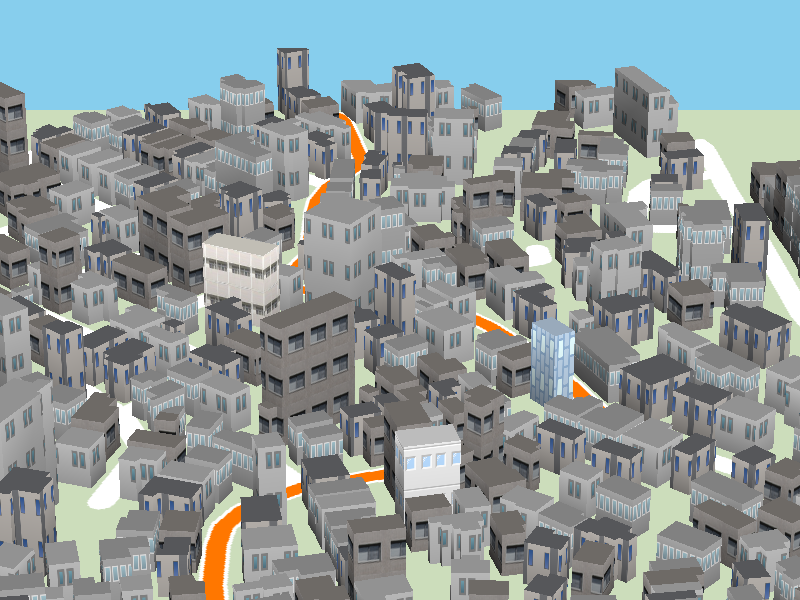

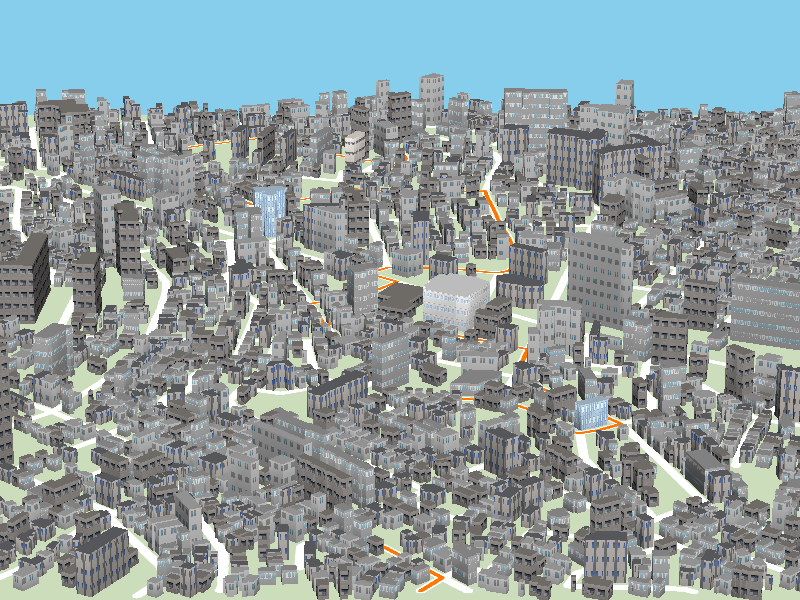

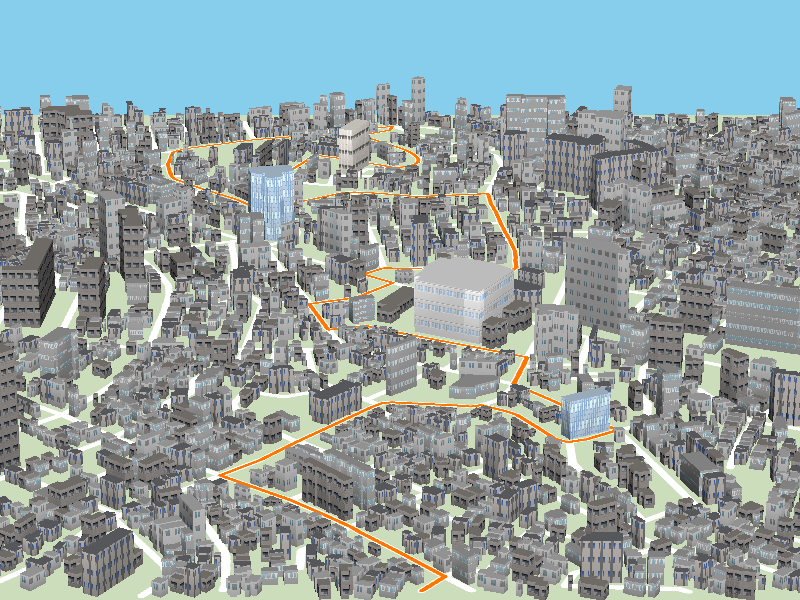

Paper

Acknowledgments

This work uses 3D urban models of Japan provided by ZENRIN CO., LTD. and digital road map databases of Japan provided by Sumitomo Electric Industries, Ltd., as part of joint research (No. 398) using spatial data provided by the Center for Spatial Information Science, the University of Tokyo. This work has been partially supported by JSPS under Grants-in-Aid for Scientific Research (B) No. 24330033.