Neuromorphic Intelligence for Anthropomorphic Robots

Principal Investigators: Abderazek Ben Abdallah (PI), Zhishang Wang (PI)

We investigate next‑generation

adaptive distributed autonomous systems

through the lens of anthropomorphic

prosthetics, androids, and intelligent robotic

platforms. Our research integrates

cutting‑edge neuroscience, artificial

intelligence, neuromorphic computing, and

robotics to create highly responsive, lifelike

systems capable of operating autonomously

while adapting to human intent and dynamic

environments. Leveraging neuromorphic

architectures and spiking neural networks, we

develop control frameworks that enable

natural, intuitive interaction between

artificial limbs, androids, and biological

systems. These brain‑inspired models support

real‑time adaptation, low‑power operation, and

seamless communication across distributed

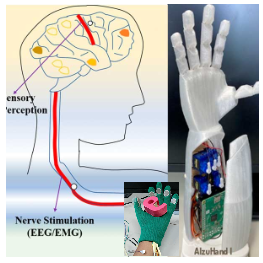

components. Our work on non‑invasive neural

interfaces allows prosthetic devices to adjust

continuously to user intent, improving

precision, comfort, and fluidity of motion. In

parallel, our research on advanced sensory

processing equips androids with human‑like

perceptual capabilities, enabling them to

interpret complex environmental stimuli,

collaborate with humans, and function

autonomously within distributed multi‑agent

settings.


AIzuHand I

Weight: 422 g | DoF: 5 | Mode: AN/SN

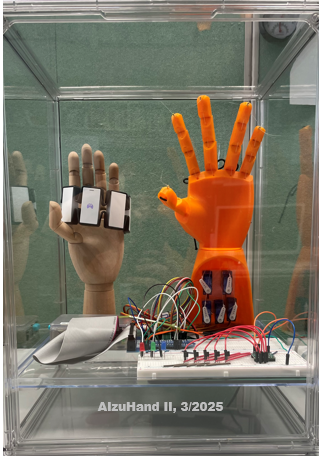

AIzuHand H

Weight: 492 g | DoF: 7 | Mode: AN/SN

AIzuHand II

DoF: 7 | Feedback: Temp | Mode: AN


AIzuAnthro: A Distributed Anthropomorphic Humanoid Robotics Platform for Multi‑Domain Applications

AIzuAnthro is a next‑generation distributed platform of anthropomorphic humanoid robots designed to operate collaboratively across diverse and demanding environments. Built on advanced neuromorphic control architectures, the system enables human‑like motion, adaptive behavior, and emergent cognitive capabilities inspired by biological neural processes. The platform integrates neuro‑inspired perception, decision‑making, and motor control to achieve fluid, realistic interactions and robust autonomy. Its distributed design allows multiple humanoid units to coordinate seamlessly, share sensory information, and execute complex tasks that exceed the capabilities of a single robot. AIzuAnthro targets a wide spectrum of high‑stakes applications, from critical mission support in space exploration, to firefighting and disaster response, to field operations in hazardous or inaccessible environments. By combining anthropomorphic embodiment with neuromorphic intelligence, the project aims to establish a versatile, resilient, and human‑compatible robotic ecosystem for the next era of intelligent machines.


Related Publications

- Ayato Murakami, Lance Deniel Suarez Malihan, Zhishang Wang, and Abderazek Ben Abdallah, "Activity-Normalized Dynamic Thresholding for Fault-Tolerant Spiking Neural Networks," ETLTC2026, January 19-25, 2026.

- Zhishang Wang, Yassine Mohamed Khedher, Khanh N. Dang, Michael Cohen, and Abderazek Ben Abdallah, "Analytical Modeling of Task Allocation for Distributed Anthropomorphic Robots in Mission-Critical Environments," 2025 IEEE 18th International Symposium on Embedded Multicore/Many-core Systems-on-Chip (MCSoC), Singapore, December 15-18, 2025.

- Cheng Hong, Sinchhean Phea, Khanh N. Dang, and Abderazek Ben Abdallah, "The AIzuHand Neuromorphic Prosthetic Hand," ETLTC2023, January 24-27, 2023.

- Sinchhean Phea and Abderazek Ben Abdallah, "An Affordable 3D-printed Open-Loop Prosthetic Hand Prototype with Neural Network Learning EMG-Based Manipulation for Amputees," ETLTC2022, January 25-28, 2022.
